July 7, 2025


It’s 12:00 a.m. and halfway across town, a network tower trips its internal sensors and alerts. Data traffic is spiking because of the notorious 11.11 e-commerce sale. Congestion warnings blaze across dashboards. Normally, this would trigger a scramble across NOC teams: frantic calls to engineers, manual checks on base stations, long hours spent combing through logs and configs.

But tonight? The network takes care of itself.

An AI model — trained not just on language, but on the language of telecom — quietly diagnoses the bottleneck, predicts the optimal reconfiguration, and tweaks parameters in seconds. By morning, your customers keep streaming, scrolling, gaming — none the wiser.

This isn’t sci-fi. It’s the new frontier of TLMs: Telecom Large Models — and they’re set to become the nerve centers of tomorrow’s networks.

Let’s face it — generic large language models (LLMs) are brilliant. They write poems, code apps, even dabble in therapy. But hand them a telecom config file or ask them to predict cell site traffic? You’ll get elegant nonsense.

That’s because telecom is a different beast. It’s a world of alarms, architecture diagrams, frequency plans, regulatory minutiae, and SOPs stacked a mile high. Ordinary LLMs simply aren’t wired for it.

Enter LTMs (Large Telco Models) and TLMs (Telecom Large Models): AI brains fine-tuned on the very DNA of telecom. SoftBank and Tech Mahindra recently unveiled these customized behemoths, built on Nvidia’s NeMo and NIM platforms, and trained on oceans of telco data, from network logs and design blueprints to alarm histories and operational playbooks.

Why does it matter? Because telcos are drowning in data. For decades they’ve collected it but struggled to use it smartly. Now, with TLMs, they can finally turn that haystack into a crystal ball.

Perhaps the most striking evolution is TSLAM-4B, a 4-billion-parameter large action model purpose-built for telecom.

Forget generic training sets. TSLAM-4B was fed 427 million tokens of pure telecom data, painstakingly curated by 27 network engineers over 135 person-months. It’s designed to internalize everything from troubleshooting flows and vendor specs to compliance norms.

With its 128K token context window, TSLAM-4B can follow complex, multi-turn conversations, track deeply nested configs, and surface nuanced insights across sprawling datasets. Thanks to 4-bit quantization, it runs on standard GPUs — no need for monster data center rigs.
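To see why 4-bit quantization matters for hardware, a rough back-of-the-envelope calculation helps. The sketch below estimates the memory taken by the weights alone for a 4-billion-parameter model. The 16-bit baseline is an assumption for comparison (the article does not state the original precision), and the estimate ignores activations, the key-value cache for long contexts, and the small overhead of quantization scales, so real usage will be higher.

```python
def weight_memory_gb(num_params: int, bits_per_weight: int) -> float:
    """Approximate memory for model weights only, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# "4-billion parameter" comes from the article; everything else is illustrative.
PARAMS = 4_000_000_000

# Assumed 16-bit baseline versus the 4-bit quantized weights.
baseline_gb = weight_memory_gb(PARAMS, 16)
quantized_gb = weight_memory_gb(PARAMS, 4)

print(f"16-bit weights: ~{baseline_gb:.1f} GB")
print(f" 4-bit weights: ~{quantized_gb:.1f} GB")
```

Under these assumptions the weights shrink from roughly 8 GB to roughly 2 GB, which is the kind of reduction that makes a single commodity GPU plausible.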

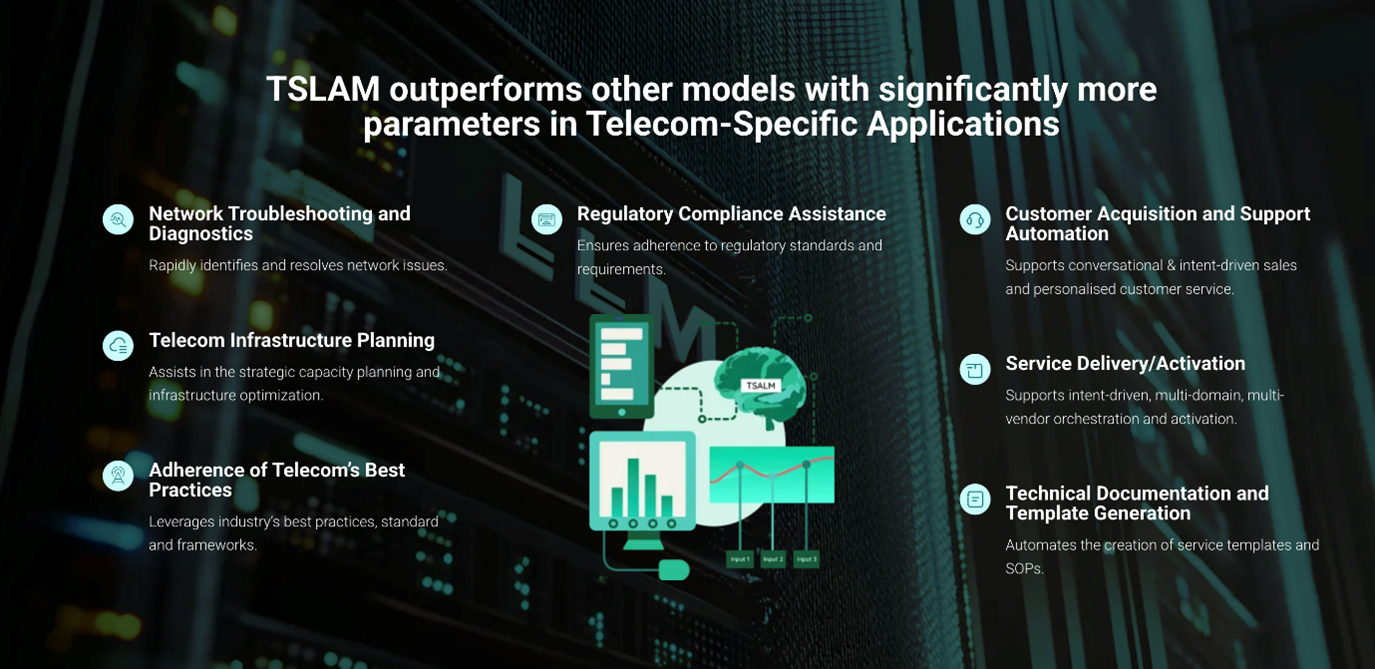

But what makes it revolutionary isn’t just its scale or efficiency. It’s what it can do:

This isn’t just comprehension — it’s action orientation, with the ability to recommend or even autonomously execute operational decisions.

TSLAM-4B isn’t alone. Across the industry, TLMs are already moving from labs to live networks.

For telcos, these aren’t just shiny toys. They’re operational lifelines.

And let’s not overlook the agentic AI angle: multi-agent systems built on these models can coordinate fixes across layers — transport, RAN, core — often without human intervention. That’s not just cost saving; it’s a survival edge in a hyper-competitive market.

Telecom has long been a paradox — staggeringly data-rich yet insight-poor. TLMs like SoftBank’s TLM, Tech Mahindra’s AI engines, and powerhouse newcomers like TSLAM-4B are flipping that script.

They promise networks that heal themselves, customers served by AI agents who truly get telecom, and planners who invest dollars guided by predictive clarity, not guesswork.

Sure, it’s early days. Risks from explainability to runaway automation loom large (just as in any AI frontier). But one thing’s clear: telcos finally have a breed of AI that speaks their language — not just fluent in text, but fluent in towers, traffic, and topology.

And that? Might just be the biggest telecom leap since the first cellular handshake.
